Chatbots and the Cost of Automation: Hidden Risks for Brands

Chatbots have quickly become one of the most adopted technologies in modern business. From customer support and sales automation to lead qualification and post-purchase engagement, chatbots promise speed, efficiency, and scalability. Brands proudly showcase AI-powered assistants as symbols of innovation and digital maturity.

But beneath this polished surface exists a reality that many businesses fail to examine closely.

The Dark Side of Chatbots is not about rejecting automation; it is about understanding the hidden risks, limitations, and unintended consequences that come with over-reliance on conversational AI. As companies rush to deploy chatbots to stay competitive, many overlook how these systems can silently damage trust, brand perception, data integrity, and even revenue.

This article explores The Dark Side of Chatbots from a business and brand perspective, focusing on real risks that decision-makers, marketers, and founders must understand before going “all-in” on chatbot technology.

The Rise of Chatbots and the Blind Spot Businesses Ignore

The chatbot boom didn’t happen by accident. Customers expect instant responses. Businesses want lower support costs. AI vendors promise smarter, faster, always-available assistants.

However, The Dark Side of Chatbots emerges when speed and automation are prioritized over human context, emotional nuance, and ethical design.

Many businesses assume:

- “AI will handle it better than humans”
- “Automation always improves efficiency”
- “Customers prefer bots over waiting for people”

But user behavior tells a more complex story.

Customers may tolerate chatbots, but tolerance is not the same as trust.

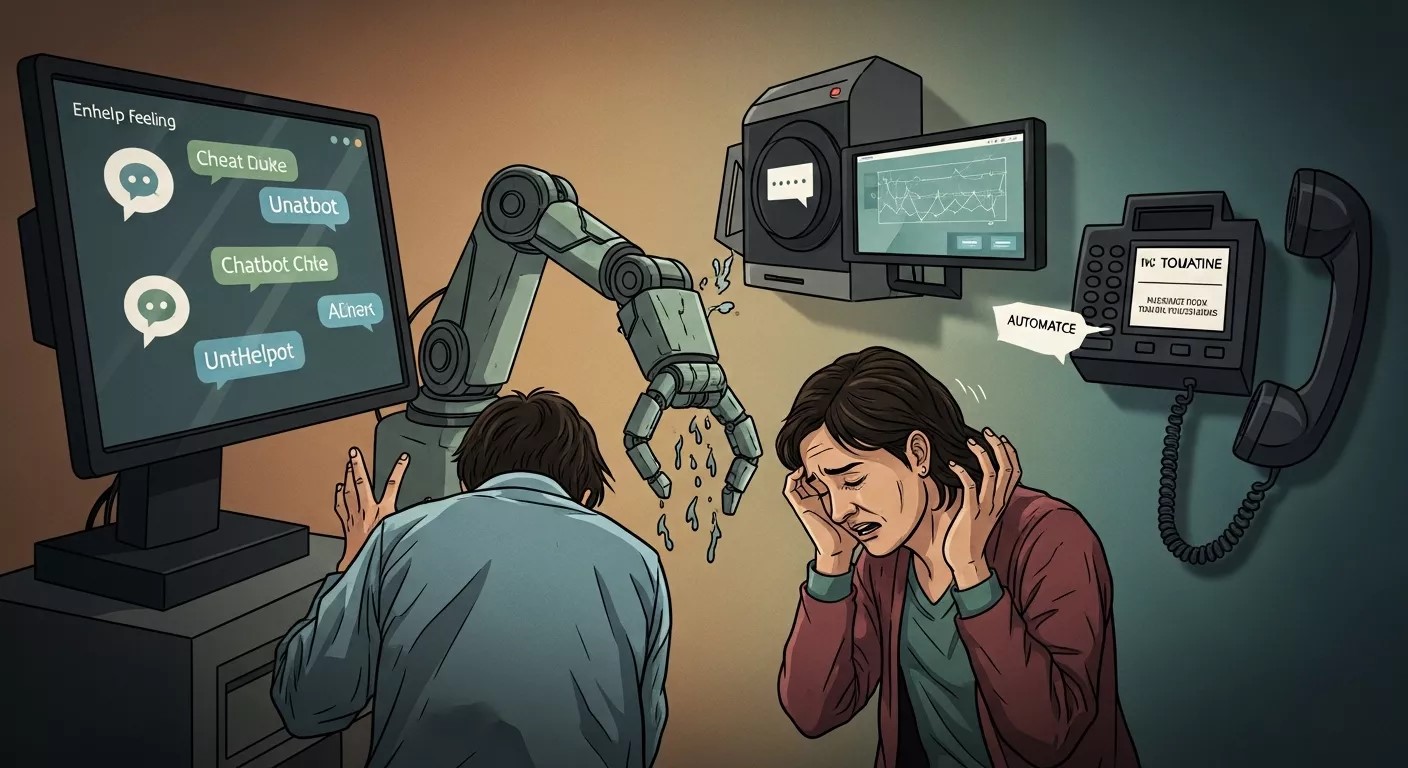

When Automation Replaces Empathy

One of the most overlooked aspects of The Dark Side of Chatbots is emotional disconnect.

Chatbots operate on logic, probability, and pattern recognition. Humans operate on emotion, intent, and context. When customers contact a business, they are often frustrated, confused, or anxious. A chatbot that responds with scripted politeness but fails to understand emotional urgency can escalate dissatisfaction instead of resolving it.

This is where the conversation around Emotionally Intelligent Chatbots becomes critical. Most chatbots today simulate empathy through predefined responses, but simulation is not the same as genuine understanding.

When customers feel unheard or misunderstood, brand trust erodes quietly.

Brand Voice Dilution: When Every Company Sounds the Same

Another major aspect of The Dark Side of Chatbots is brand homogenization.

Many businesses deploy off-the-shelf chatbot frameworks with minimal customization. The result? Identical tones, generic phrases, and robotic language across industries.

Instead of strengthening brand identity, chatbots often:

- Remove personality
- Flatten brand voice
- Reduce emotional connection

Over time, customers stop associating warmth, reliability, or uniqueness with the brand—and start associating it with “just another bot.”

This is one of the subtle but long-term risks hidden inside The Dark Side of Chatbots.

Overconfidence in AI Accuracy

AI chatbots are impressive, but they are not infallible.

One dangerous assumption driving The Dark Side of Chatbots is the belief that AI responses are always correct. In reality, chatbots:

- Can hallucinate information
- Misinterpret user intent
- Provide outdated or incorrect answers
- Fail in edge-case scenarios

When a chatbot confidently delivers wrong information, customers often blame the brand, not the technology.

This creates legal, reputational, and operational risks that many businesses underestimate while embracing automation.

The Silent Threat of Data Exposure

Among the most serious components of The Dark Side of Chatbots is data vulnerability.

Every chatbot interaction involves data: names, emails, preferences, complaints, sometimes even financial or personal information. Poorly designed systems can expose this data through:

- Weak authentication
- Improper storage practices
- Third-party integrations

This is where Chatbots and Data Privacy becomes a board-level concern, not just a technical one.

Customers trust brands with their information. A single chatbot-related breach can permanently damage that trust.
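One way to reduce what a breach can expose is to mask sensitive values before a conversation is ever written to storage. The snippet below is a minimal sketch of that idea, not a complete privacy control: the `redact` function and both patterns are hypothetical names chosen for illustration, and they only catch email addresses and card-like digit runs.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive PII detector).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(message: str) -> str:
    """Mask emails and card-like numbers before a message is logged."""
    message = EMAIL_RE.sub("[EMAIL]", message)
    message = CARD_RE.sub("[CARD]", message)
    return message


if __name__ == "__main__":
    print(redact("My card is 4111 1111 1111 1111, email jane@example.com"))
```

Redacting at the point of logging means that downstream storage and third-party integrations never receive the raw values, although real deployments would likely need broader detection than two regular expressions.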

Privacy Expectations vs. Reality

Modern consumers are increasingly aware of digital privacy. They ask questions like:

- Who is storing my data?
- How long is it kept?
- Is my conversation being monitored?

The Dark Side of Chatbots intensifies when businesses fail to transparently answer these questions.

Many chatbot deployments lack clear disclosure policies. Some collect data silently. Others retain conversations indefinitely. This gap between expectation and reality fuels skepticism and disengagement.

That’s why Privacy & Security in Web Chatbots is no longer optional; it’s foundational to sustainable AI adoption.

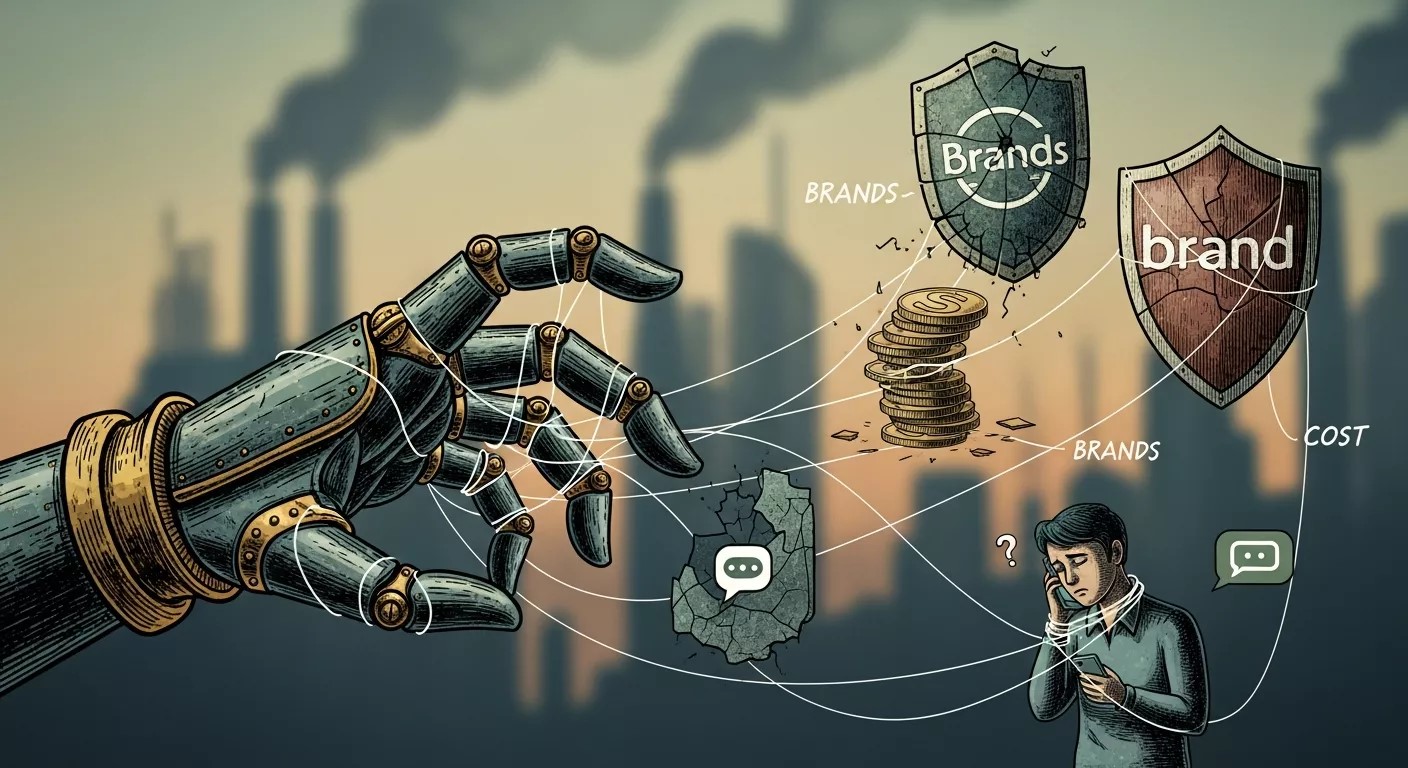

The Illusion of Cost Savings

Chatbots are often sold as cost-cutting tools. And initially, they are.

But The Dark Side of Chatbots reveals itself when hidden costs surface:

- Increased churn due to poor experiences
- Higher escalation rates to human agents
- Brand damage requiring expensive recovery campaigns
- Legal risks from misinformation or data misuse

Automation without strategic oversight often shifts costs instead of eliminating them.

Reduced Human Accountability

When something goes wrong in a chatbot interaction, who is responsible?

This ambiguity is a defining feature of The Dark Side of Chatbots.

Was it the AI vendor?

The training data?

The developer?

The business?

Customers don’t care. They hold the brand accountable.

Without clear governance, chatbot systems create accountability gaps that expose businesses to reputational and legal risk.

Customer Frustration from Over-Automation

Many brands make the mistake of forcing chatbot interactions even when human support would be more appropriate.

This leads to:

- Endless loops
- Poor escalation paths
- Repetitive questioning
- Delayed resolutions

What starts as efficiency becomes friction.

This pattern reinforces The Dark Side of Chatbots, where automation creates barriers instead of removing them.
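A simple guard against loops and dead ends is an explicit rule for when the bot must hand the conversation to a person. The following is a rough sketch under stated assumptions: `Conversation`, `should_escalate`, the two-failure threshold, and the handoff phrases are all hypothetical and would need tuning for a real product.

```python
from dataclasses import dataclass, field

MAX_FAILED_TURNS = 2  # assumed threshold, chosen for illustration
HANDOFF_PHRASES = ("human", "agent", "representative")  # assumed keywords


@dataclass
class Conversation:
    failed_turns: int = 0
    seen_messages: set = field(default_factory=set)


def should_escalate(convo: Conversation, message: str, understood: bool) -> bool:
    """Return True when the conversation should move to a human agent."""
    text = message.strip().lower()
    # An explicit request for a person always wins.
    if any(phrase in text for phrase in HANDOFF_PHRASES):
        return True
    # A repeated message suggests the user is stuck in a loop.
    repeated = text in convo.seen_messages
    convo.seen_messages.add(text)
    if not understood:
        convo.failed_turns += 1
    return repeated or convo.failed_turns >= MAX_FAILED_TURNS
```

The point of the design is that escalation is decided by observable signals (a direct request, a repeated question, consecutive misunderstandings) rather than left to the bot's own confidence.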

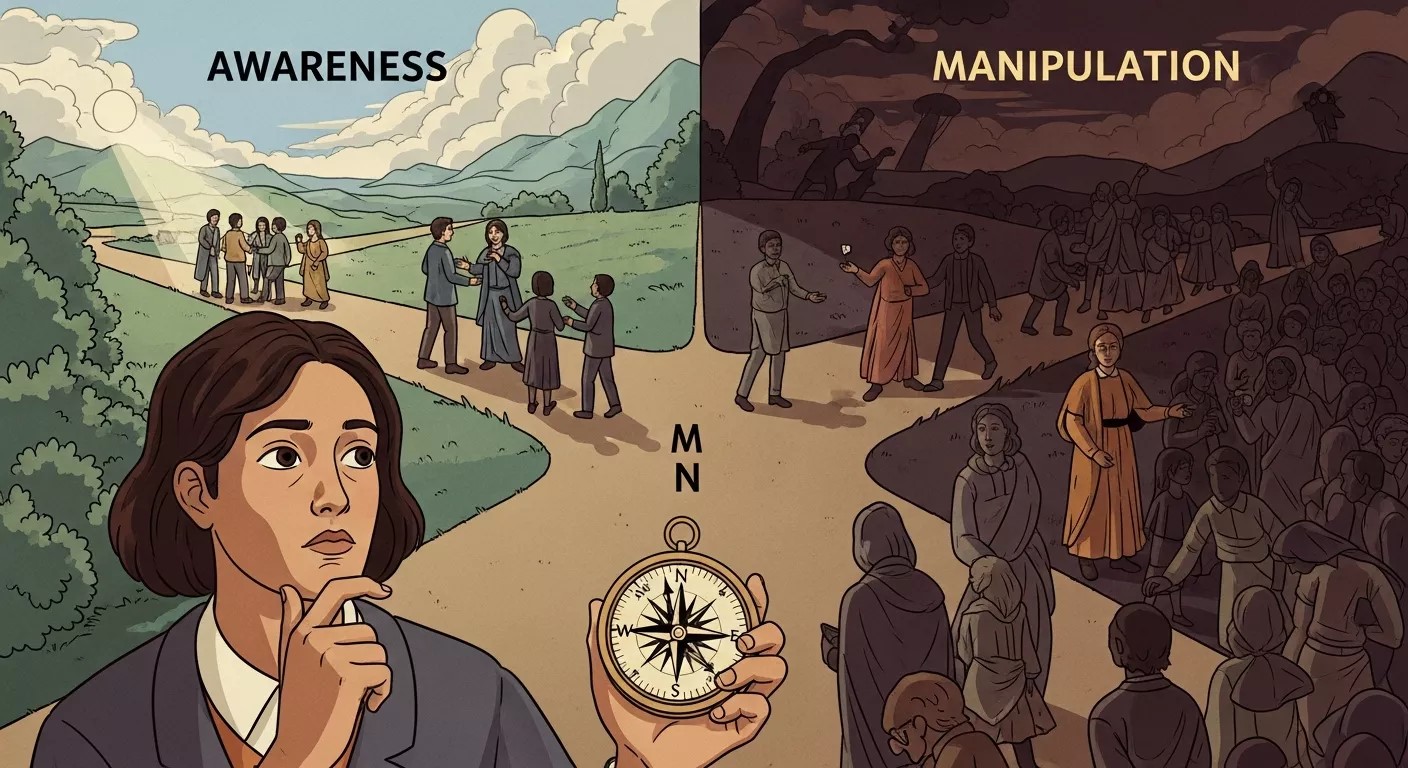

Ethical Concerns and Manipulation Risks

As chatbots become more persuasive, ethical questions arise.

Some systems are optimized to:

- Push sales aggressively
- Deflect refunds
- Delay cancellations
- Influence decisions subtly

When users realize they’re being nudged or manipulated, trust collapses.

Ethical misuse is one of the fastest-growing dimensions of The Dark Side of Chatbots, especially as conversational AI becomes more sophisticated.

Training Data Bias and Brand Damage

Chatbots learn from data. Data reflects human bias.

If training datasets include biased, outdated, or culturally insensitive material, chatbots may generate responses that:

- Offend users
- Reinforce stereotypes
- Exclude certain groups

This creates PR crises that feel sudden but are rooted in neglected AI governance.

Bias amplification is a core issue within The Dark Side of Chatbots that brands often discover too late.

Loss of Deep Customer Insights

Ironically, while chatbots collect massive amounts of data, many businesses fail to extract meaningful insights.

Raw conversation logs without proper analysis lead to:

- Missed customer sentiment signals
- Ignored pain points
- Shallow understanding of user needs

This analytical blindness is another overlooked layer of The Dark Side of Chatbots.

Dependency Risk: When Systems Fail

What happens when:

- Servers go down?
- APIs fail?
- AI models degrade?

Businesses overly dependent on chatbots face operational paralysis when systems malfunction. Human teams, downsized or undertrained, may struggle to recover quickly.

This fragility is a structural weakness embedded in The Dark Side of Chatbots.
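One hedge against this fragility is to wrap every bot call so that a failure routes the customer to people instead of leaving them with an error. This is a minimal sketch, assuming the bot is any callable that takes a message and returns a reply; `answer_with_fallback`, `FALLBACK_REPLY`, and the in-memory `human_queue` are hypothetical stand-ins for a real ticketing system.

```python
from typing import Callable, List

FALLBACK_REPLY = (
    "Our assistant is unavailable right now. A team member will follow up."
)


def answer_with_fallback(
    message: str,
    bot: Callable[[str], str],
    human_queue: List[str],
) -> str:
    """Try the bot first; on any failure, queue the message for a human."""
    try:
        return bot(message)
    except Exception:
        # Assumption: any error from the bot backend counts as an outage.
        human_queue.append(message)
        return FALLBACK_REPLY
```

A fallback like this only helps if the human queue is actually staffed, which is why the downsizing risk described above matters as much as the code path.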

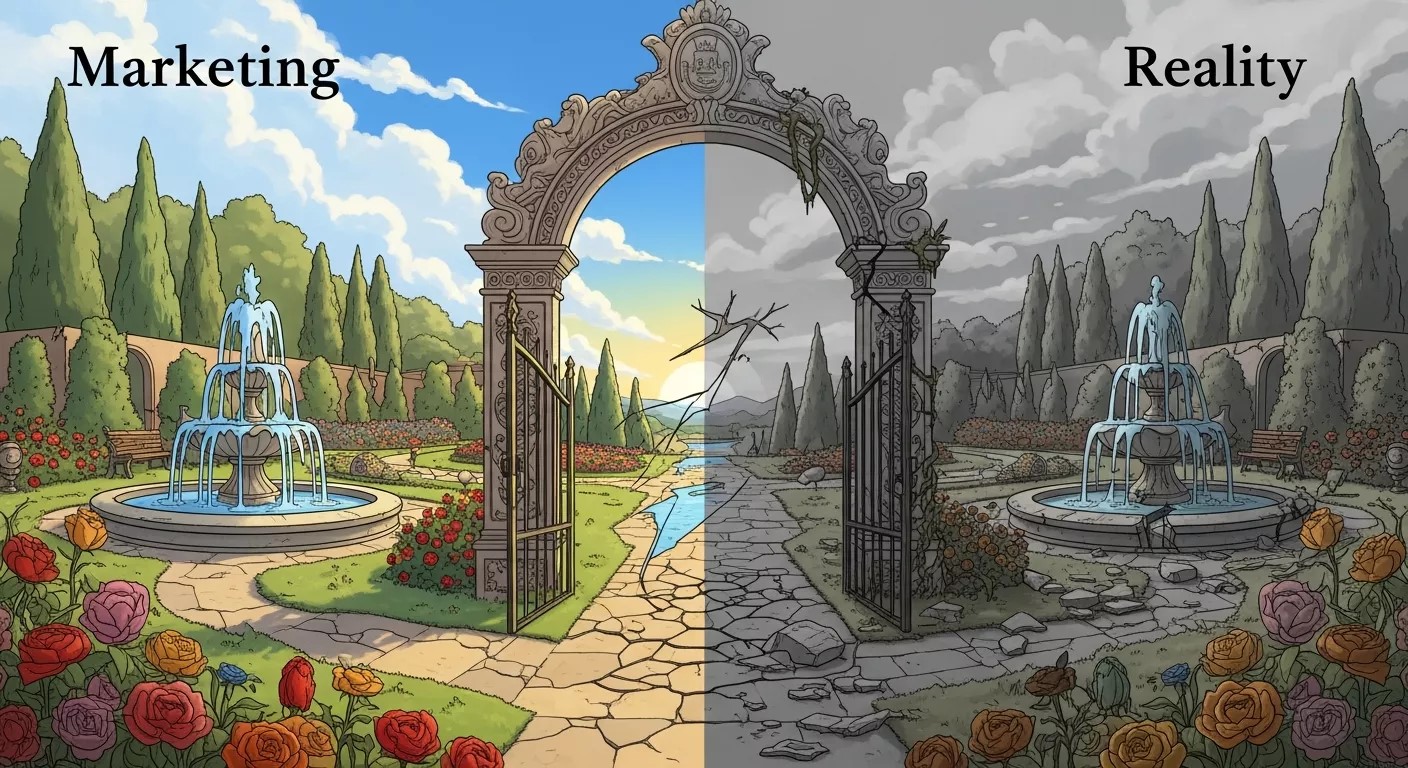

Misaligned Expectations Between Marketing and Reality

Marketing often oversells chatbot capabilities:

- “24/7 intelligent support”
- “Human-like conversations”
- “Instant problem resolution”

When reality falls short, disappointment sets in.

Expectation gaps accelerate dissatisfaction and reinforce negative perceptions associated with The Dark Side of Chatbots.

Chatbots as a Brand Trust Test

Every chatbot interaction is a trust moment.

Handled well, chatbots can indeed become your secret weapon, enhancing experience, speed, and accessibility.

Handled poorly, they become silent brand destroyers.

Understanding The Dark Side of Chatbots allows businesses to design systems that support, not replace, human intelligence.

The Strategic Way Forward

Recognizing The Dark Side of Chatbots does not mean abandoning AI. It means:

- Designing with empathy
- Maintaining human oversight
- Prioritizing transparency
- Investing in ethical AI practices
- Treating chatbots as assistants, not replacements

The future belongs to hybrid systems where automation and humanity coexist.

Human Skill Erosion and Overdependence on Automation

One of the long-term consequences often ignored in discussions about The Dark Side of Chatbots is the gradual erosion of human expertise. As businesses rely more heavily on chatbots for customer-facing interactions, human teams are used less frequently for problem-solving and decision-making.

Over time, this creates operational dependency. When complex issues arise that require judgment, empathy, or creative resolution, teams may struggle to respond effectively. The chatbot becomes the default brain of the operation, while humans are reduced to reactive roles rather than strategic ones.

This imbalance weakens organizational intelligence and makes businesses vulnerable when automated systems fail or underperform.

Cultural and Language Context Failures

Chatbots are often deployed globally, but language translation does not equal cultural understanding. A major element of The Dark Side of Chatbots lies in their inability to grasp regional nuances, cultural sensitivities, and contextual meaning.

What sounds neutral in one market can appear rude, dismissive, or even offensive in another. Humor, sarcasm, and emotional cues are especially difficult for AI systems to interpret correctly. When these failures happen, users associate the mistake with the brand, not the technology.

For global businesses, cultural misalignment can quietly damage reputation in key markets without immediate visibility.

Growing Customer Awareness and Manipulation Fatigue

Modern users are far more aware of how automated systems work. When customers sense that a chatbot is intentionally delaying resolution, steering conversations away from refunds, or using scripted empathy to influence decisions, trust begins to erode.

This phenomenon, often referred to as manipulation fatigue, is a rising dimension of The Dark Side of Chatbots. Instead of feeling supported, customers feel managed.

Once this skepticism sets in, even well-designed chatbot interactions are viewed with suspicion, making future engagement harder and less effective.

Regulatory Pressure and Compliance Uncertainty

AI regulation is evolving rapidly across regions, and chatbots sit directly in the regulatory spotlight. New requirements around transparency, data handling, consent, and automated decision-making are emerging faster than many businesses can adapt.

A chatbot that is compliant today may become a legal liability tomorrow. This regulatory unpredictability adds another layer to The Dark Side of Chatbots, especially for organizations operating across multiple jurisdictions.

Without proactive governance and continuous monitoring, businesses risk sudden compliance failures that can lead to fines, legal challenges, and public scrutiny.

Conclusion (No Conclusion, Just Perspective)

The chatbot conversation is no longer about whether to use AI, but how responsibly it is implemented.

Businesses that ignore The Dark Side of Chatbots risk more than operational inefficiency; they risk trust, loyalty, and long-term brand equity.

Those who confront it honestly will build smarter, safer, and more human digital experiences.

Frequently Asked Questions (FAQ)

What is meant by the dark side of chatbots?

The dark side of chatbots refers to the hidden risks of chatbot adoption, including trust erosion, poor customer experience, data privacy issues, and negative brand impact caused by over-automation.

Why are businesses increasingly concerned about chatbot risks?

As chatbots handle more customer interactions, mistakes become more visible. Incorrect responses, lack of empathy, and data mishandling can directly affect brand reputation and customer trust.

Can chatbots harm customer experience?

Yes. When chatbots fail to understand intent or block access to human support, they can frustrate users and create negative experiences instead of solving problems.

How do chatbots affect brand trust?

Chatbots influence brand trust through tone, accuracy, and transparency. Poorly designed bots can make a brand seem careless, unresponsive, or unreliable.

Are AI chatbots secure for handling customer data?

AI chatbots can pose security risks if data storage, encryption, and access controls are weak. Proper governance is essential to protect sensitive customer information.

What data privacy issues are linked to chatbots?

Chatbots often collect personal data during conversations. Without clear consent, disclosure, and retention policies, this data can be misused or exposed.

Can chatbots fully replace human customer support teams?

No. Chatbots work best for repetitive tasks, but complex or emotional issues require human understanding, judgment, and accountability.

What are the biggest long-term risks of chatbot dependency?

Overdependence on chatbots can reduce human expertise, weaken problem-solving skills, and create operational risks if automated systems fail.

How can businesses use chatbots responsibly?

Responsible chatbot use involves human oversight, clear escalation paths, ethical design, regular audits, and alignment with brand values and customer expectations.

Are chatbots still worth using despite these risks?

Yes. When implemented strategically, chatbots can improve efficiency and support growth. The key is balancing automation with human intelligence.
